ByteDance Unveils Next-Gen AI Robot That Performs Household Tasks

On July 22, 2025, ByteDance, the parent company of TikTok, released a video demonstration of its new AI-powered robotic system. The release signals a significant move beyond digital apps and into physical automation, showing how AI can now interact with and manipulate the real world in ways that were previously only imagined.

GR-3 and ByteMini: ByteDance’s Vision-Language-Action Robotics

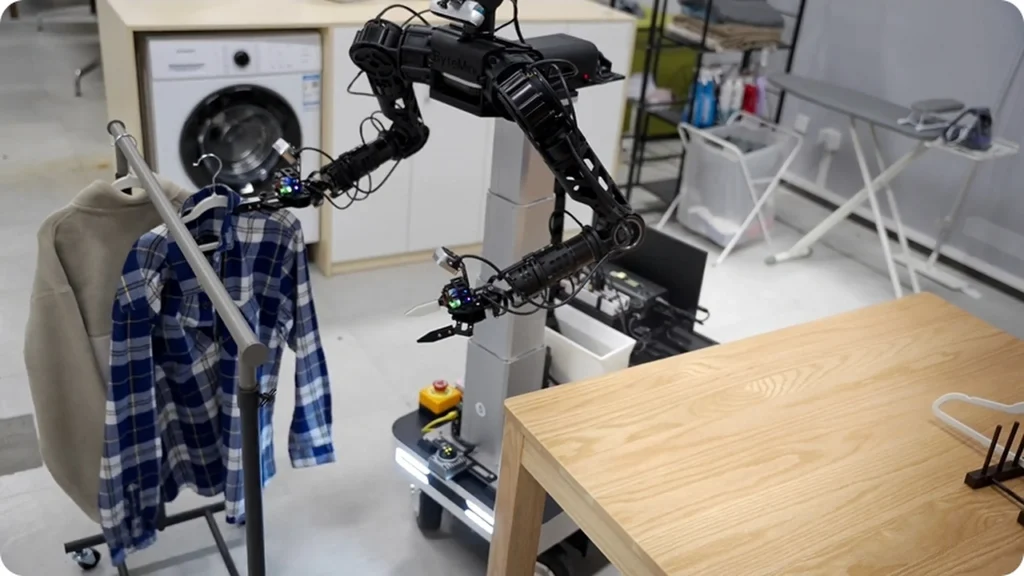

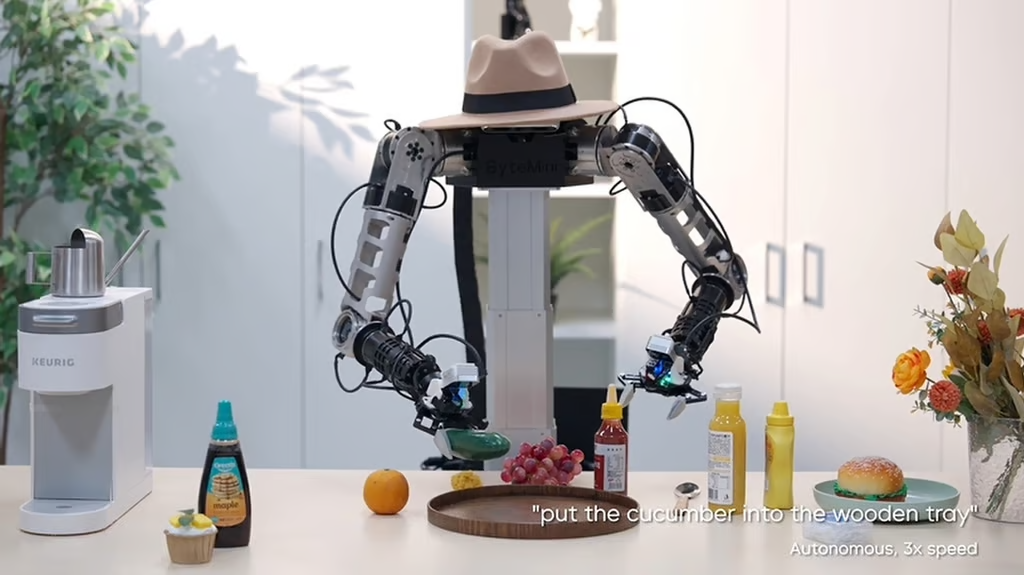

At the heart of ByteDance’s breakthrough is a large-scale model called GR-3, designed as a “vision-language-action” (VLA) system. Unlike traditional robots that follow rigid programming, GR-3 enables robots to interpret spoken or written commands, understand their environment through vision, and then act accordingly. In other words, instead of coding a robot step by step, users can simply ask it to perform a task, such as “hang up the clothes” or “clear the table,” and the robot will figure out how to do it.
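To make the vision-language-action idea concrete, here is a minimal Python sketch of the interface such a system implies: an instruction and an observation go in, a sequence of actions comes out. Everything in it is a hypothetical illustration, not ByteDance's API: the names `Observation`, `Action`, `VLAPolicy`, and `ToyPolicy` are invented here, and the hand-written rule stands in for what GR-3 would learn from data.

```python
from dataclasses import dataclass
from typing import Protocol, Sequence


@dataclass(frozen=True)
class Observation:
    """Hypothetical: what the robot sees, reduced to a list of object labels."""
    visible_objects: Sequence[str]


@dataclass(frozen=True)
class Action:
    """Hypothetical: one low-level step, e.g. Action("pick", "cup")."""
    verb: str
    target: str


class VLAPolicy(Protocol):
    """Anything that maps (language, vision) to a list of actions."""

    def act(self, instruction: str, observation: Observation) -> list[Action]: ...


class ToyPolicy:
    """Stand-in for a learned model: a single hand-written rule, for illustration only."""

    def act(self, instruction: str, observation: Observation) -> list[Action]:
        actions: list[Action] = []
        if "clear the table" in instruction.lower():
            # Remove every object the "camera" reports, one at a time.
            for obj in observation.visible_objects:
                actions.append(Action("pick", obj))
                actions.append(Action("place_in_bin", obj))
        return actions
```

Calling `ToyPolicy().act("Clear the table", Observation(["cup", "plate"]))` returns a pick-and-place pair for each object. The point of the sketch is the shape of the interface: the caller states a goal in plain language rather than scripting each motion, and a real VLA model would replace the hard-coded rule with learned behavior.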

Pairing this brain with a physical body, ByteDance developed ByteMini, a mobile robot that serves as the hardware platform for GR-3. Training the system required a hybrid approach: GR-3 was first fed large volumes of image and text data, then fine-tuned with human interactions in virtual reality, and finally taught to mimic real-world robot movements. This comprehensive training strategy gives GR-3 the adaptability needed to handle complex, unpredictable environments.

Breaking Barriers: The Challenge and Promise of General-Purpose AI Robots

Historically, robots have struggled to perform general tasks in dynamic, everyday environments. Programming a robot to handle the endless variety of objects, situations, and instructions in a home is a formidable challenge. Traditional robots could excel at repetitive or highly structured jobs, but they faltered when faced with uncertainty or new scenarios.

AI models like GR-3 are changing that paradigm. By combining visual perception, language understanding, and learned action, these robots can now interpret human instructions and respond flexibly to the world around them. No longer limited to factory lines, robots powered by VLA models could soon tackle a wide range of household chores and assist in settings like hospitals, hotels, and restaurants. The ability to follow natural language commands and adapt to the unexpected marks a fundamental shift in what’s possible for robotics.

The Future: Evolving Capabilities and Broader Impact

China’s rapid progress in this field suggests that robotics and AI are entering a new era. The fusion of advanced AI models with ever-more capable hardware points toward a future where robots become general-purpose helpers in our daily lives.

As AI models improve and robotics hardware becomes more sophisticated, we may see robots that learn continuously from their environment, personalize their behavior to individual users, and operate safely alongside humans. The line between digital and physical automation will continue to blur, with robots moving from simple automation tools to intelligent, adaptable assistants. These advances could transform not just homes, but industries ranging from elder care to logistics, fundamentally altering how society approaches work and daily living.